People often ask me what I’m running as a test environment at home. Usually I try to borrow a lab on-site at whichever client I may be working at, but sometimes you just need to test something quickly or build a template without that sort of access.

Over the last while, I’ve put together some generic PC parts (read: cheap) for a home ESX server.

Currently at home, I also run a Windows Home Server for my personal photos, video and PC/Laptop backups. The WHS server runs on an Acer H340 (dual-core Atom CPU), which seems a little sluggish at times for transcoding streaming video at 1080p to my PS3 (using Java PS3 Media Server), so eventually I’ll be migrating that to a VM on this ESX box. It’s not likely that I’ll be watching something while I’m using the ESX box for work, so I can always suspend that VM while it’s not in use, or tag it as “Low Priority” so it won’t affect my other workloads.

Current Specs:

- ASUS H67 Motherboard (Intel H67 chipset)

- Intel i5-2400 3.10GHz, 6MB Cache Quad-Core CPU

- 8GB Patriot DDR3 PC-12800 (1600MHz) Memory (2x4GB)

- ANTEC 430W Power Supply

- 6x 2.0TB Western Digital WD20EARS Hard Drives (64MB Cache)

- 2x Intel PRO/1000 GT Network Interface Cards (*See attempt 1)

ESX doesn’t support HostRAID-style RAID adapters, so the onboard Intel ICH RAID was not going to work. I figured I’d try to use a FreeNAS VM and present the storage using software RAID-Z and NFS.

If you’re looking to see what whitebox hardware works with ESX, there is an excellent “HCL” put together for common PC equipment that works with ESX (obviously you won’t get support on this hardware). You can find the whitebox HCL here: http://www.vm-help.com/esx40i/esx40_whitebox_HCL.php

Attempt #1: ESXi 4.1U1 with FreeNAS VM for NFS storage

The idea here was to install ESXi 4.1 update 1, run ESX from a 1GB USB key, use a FreeNAS VM (attaching all 6 drives via RDM) and combine all storage into a single RAID-Z and present back to ESX via NFS.

ESX installed and ran fine from the USB key, but there were some issues with this setup:

1) ESXi 4 didn’t recognize the onboard RealTek NIC (*So I went out and got 2 Intel NICs; teaming is good anyway)

2) Presenting the SATA disks as RDMs to the VM had to be done manually via the console using vmkfstools. I was able to use David Warburton’s approach (found here: http://blog.davidwarburton.net/2010/10/25/rdm-mapping-of-local-sata-storage-for-esxi/)

3) FreeNAS doesn’t support “vmxnet3”, so I’ll use “vmxnet2 (enhanced)”

4) NFS performance back to ESX was terrible. I was able to use the FreeNAS VM as storage from external machines with decent performance, but when trying to use the NFS export back on the ESX host on the same hardware, performance was terrible.
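The manual RDM step in item 2 comes down to running `vmkfstools` once per disk. Below is a minimal sketch that only builds those command lines so they can be reviewed before being pasted into the ESXi console. It assumes physical compatibility mode (`-z`; `-r` would create a virtual compatibility mapping instead). The device identifiers, the datastore name `datastore1`, the `rdm` folder and the `rdm_diskN.vmdk` file names are all hypothetical placeholders, not values from this build.

```python
def rdm_commands(device_ids, datastore="datastore1", subdir="rdm"):
    """Build one `vmkfstools -z` command line per local disk.

    device_ids: names as listed under /vmfs/devices/disks/ on the host.
    datastore / subdir: hypothetical location for the RDM pointer files.
    """
    commands = []
    for index, device_id in enumerate(device_ids, start=1):
        source = f"/vmfs/devices/disks/{device_id}"
        pointer = f"/vmfs/volumes/{datastore}/{subdir}/rdm_disk{index}.vmdk"
        # -z creates a physical compatibility mode RDM pointer file
        commands.append(f"vmkfstools -z {source} {pointer}")
    return commands


if __name__ == "__main__":
    # Placeholder identifiers; substitute the real names from the host.
    example_ids = ["t10.ATA_____EXAMPLE_DISK_1", "t10.ATA_____EXAMPLE_DISK_2"]
    for line in rdm_commands(example_ids):
        print(line)
```

The generated `.vmdk` pointer files can then be attached to the FreeNAS VM as existing disks.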

OK, this performance is just too bad to use. Using a dd test from the FreeNAS console, the disk performance seems to be fine, but when presenting the storage to ESX over NFS and using esxtop to view a dd performed via ESX, I get about 9-10MB/s per drive, tops. Time to try something else.

Attempt #2: ESXi 5.0 with FreeNAS VM for NFS

OK, on to version 5. Same approach as before, but let’s use ESXi 5.0 and a local disk for ESX instead of the USB key. There really wasn’t any reason not to use the USB key, aside from my fears that it was an older key and might not stand the test of time. It was more of a proof of concept anyway (just to try it out), and it worked.

Some findings here:

1) ESXi 5.0 recognizes my RealTek onboard NIC (Yay! Now I have 3x1Gbps to work with)

2) NFS performance is still terrible (I wasn’t expecting much here, but it was worth a shot)

3) After reading several other blogs, it seems that iSCSI with FreeNAS is the way to go for ESX.

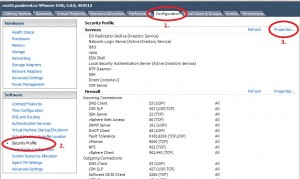

A nice thing with ESXi 5.0 is that you can gain SSH console access with a simple modification to the security profile through the GUI. All you need to do is log in via the vSphere Client and:

- Click the “Configuration” tab on the ESX host

- Select the “Security Profile” configuration

- In the “Services” section, click the “Properties…” link

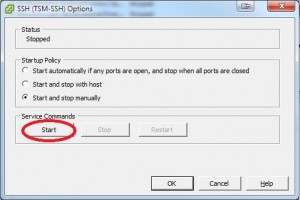

- Select “SSH” and click the “Options…” button

- In the service commands section, click the “Start” button

The SSH service will remain active until you go back and click the “Stop” button, or restart the ESXi 5.0 host. I would recommend stopping the service once you are done with any SSH sessions.

Results:

“esxtop” during a “dd if=/dev/zero of=test.dd bs=1M count=2048” through the service console.

vmhba32 through vmhba36 are my five 2.0TB WD drives. I stopped the dd after a few minutes and less than 112MB had been written.

“esxtop” during the same dd command run through the FreeNAS VM console. 2GB took 22sec, resulting in about 96MB/s write speed. I can live with that, so why is it so bad through ESX?
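The throughput figure is simply the amount written divided by the elapsed time. Here is a rough sketch of a dd-style sequential write test with that arithmetic, useful on a machine where `dd` is not handy. The function names and the small 16 MiB default test size are illustrative assumptions, and this is not the tool used for the numbers in this post.

```python
import os
import tempfile
import time


def throughput_mib_per_s(mib_written, seconds):
    """Average write rate in MiB/s for a finished transfer."""
    return mib_written / seconds


def timed_zero_write(path, total_mib, block_mib=1):
    """Write zero-filled blocks to path, roughly like
    `dd if=/dev/zero of=path bs=1M count=total_mib`.
    Returns (MiB written, elapsed seconds)."""
    block = bytes(block_mib * 1024 * 1024)  # one block of zeros
    blocks = total_mib // block_mib
    start = time.perf_counter()
    with open(path, "wb") as handle:
        for _ in range(blocks):
            handle.write(block)
        handle.flush()
        os.fsync(handle.fileno())  # include the time to reach the disk
    elapsed = time.perf_counter() - start
    return blocks * block_mib, elapsed


if __name__ == "__main__":
    # Small hypothetical test size so the sketch finishes quickly.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "test.dd")
        written, seconds = timed_zero_write(target, total_mib=16)
        print(f"{written} MiB in {seconds:.3f}s = "
              f"{throughput_mib_per_s(written, seconds):.1f} MiB/s")
```

As a sanity check on the FreeNAS console result, `throughput_mib_per_s(2048, 22)` comes to roughly 93 MiB/s (about 98 MB/s in decimal megabytes), which is in the same ballpark as the figure quoted above.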

And another “esxtop” session while using a CIFS transfer from my desktop to the same location (same 2GB test file copied to, then from my desktop). Not the greatest through CIFS either, but that could be due to the network and/or the speed of my desktop. 60MB/s write isn’t terrible for a home server, but I should be seeing much better performance from the box internally.

Attempt #3: ESXi 5.0 with FreeNAS iSCSI

The iSCSI implementation on FreeNAS for RAID-Z is a bit different. You need to set up a “volume dataset” on an existing RAID-Z volume and present that as an iSCSI target. I was hoping to just present the whole RAID-Z as a target, but it appears that isn’t possible in the current version of FreeNAS (v8.0.1). This seems like a lot of hoops if something were to ever go wrong with the setup … but it’s worth trying out for proof of concept.

“esxtop” during a dd write test on the ESX Service Console to the iSCSI volume. vmhba37 is the software iSCSI HBA.

The implementation of iSCSI with RAID-Z on FreeNAS works, and the performance is a little better, but not as good as you would expect from a 5-drive RAID-Z array. This time it seemed to be on par with the performance of the CIFS transfer above.

Clearly there is something wrong with the NFS implementation, either in FreeNAS 8.0.1 itself or in my particular setup. Even though the performance is decent with iSCSI (50MB/s), it’s still nowhere near the 90+MB/s we see from the FreeNAS VM console writing directly to the same disks. I attempted to add a 2nd NIC to the iSCSI group, but teaming isn’t allowed for ESXi software iSCSI. It shouldn’t make a difference though, since the network traffic should be all internal to the ESX host. There’s even a way to trick the iSCSI software HBA into not having a physical NIC attached at all (i.e., remove the physical NIC after setting it up).

The vmxnet2 driver for FreeNAS doesn’t support MTU adjustment, but I did try an Intel PRO/1000 virtual NIC with jumbo frames (MTU set to 9000). The results were similar; no magic pill here.

Summary

From the FreeNAS VM console, I get 90+MB/s writes and excellent read speeds while testing performance to the disk. Unfortunately, this performance doesn’t translate well when presenting the same storage back to the ESX host running on the same physical hardware.

I see things top out at about 50MB/s while using this setup for iSCSI, while using NFS presented back to ESX seems completely unusable.

While disk performance may be acceptable using the iSCSI method to run some VMs, I don’t like the idea of taking a 50% performance cut by going with a software solution for RAID. There is also the complexity of this setup in the event that a failure does occur. While it’s not too bad to replace a disk in the FreeNAS VM RAID-Z setup, it’s a lot easier in a hardware RAID solution to just swap the disk and let the hardware rebuild itself.
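The “50% performance cut” is a rounded figure. A small sketch of the arithmetic, using only the approximate numbers quoted in this post (the function name is illustrative):

```python
def percent_drop(baseline_mb_s, observed_mb_s):
    """Relative throughput loss, as a percentage of the baseline."""
    if baseline_mb_s <= 0:
        raise ValueError("baseline must be positive")
    return (baseline_mb_s - observed_mb_s) / baseline_mb_s * 100.0


if __name__ == "__main__":
    # Approximate figures from the post: direct FreeNAS console writes
    # versus the same disks presented back to ESX over iSCSI.
    for baseline in (90, 96):
        print(f"{baseline} -> 50 MB/s: {percent_drop(baseline, 50):.1f}% slower")
```

With a 90MB/s baseline the drop is about 44%, and with the 96MB/s dd result it is about 48%, so “roughly half” is a fair summary.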

It’s unfortunate that the ICH RAID provided on the Intel chipset isn’t usable by ESX, but I think I’ll be looking for a hardware RAID-5 solution regardless. I need something I can trust my personal data with, in addition to providing a test box for VM testing and template creation. I was hoping to avoid the extra cost by going with a software RAID solution, but it appears that either ESX or FreeNAS doesn’t handle this setup very well when combined on the same box.

-Paul

Have you, perchance, tried the same setup with OpenFiler instead?

Sorry for the late reply.

No, I have not tried OpenFiler. I have since abandoned the attempt in favor of a hardware RAID solution for my whitebox ESX host.

Thanks,

Paul